Audience

Kubernetes Homelab users, Tailscale users

Introduction

In this post I tell the story of my attempt to replace an existing, workable but cumbersome solution for routing Tailscale traffic in my Kubernetes homelab with the simplicity and elegance of the Tailscale Operator for Kubernetes, which just went into public beta. Along the way I share learnings about a compatibility gotcha with recent Ubuntu distros, including the workaround, as well as a mini tutorial on deploying a private version of the operator from source. I cover both the Kubernetes service incarnation and the awesome new L7 ingress capability that was recently merged.

I also recorded a demo that shows the operator working in a k3s-based home cluster running on Raspberry Pis.

Tailscale X Kubernetes

I’ve been deep down the Kubernetes self-hosting rabbit hole for a while now, with multiple home clusters running k3s. Beyond learning Linux and Kubernetes, I have been embracing homelabs as part of a strategy to regain control over my digital footprint, running home instances of a number of services including Bitwarden, Home Assistant, Gitea and a private instance of Docker Hub, to name a few. And this is where the interesting union of Kubernetes self-hosting and Tailscale comes about.

The services I host are largely private in the sense that they don’t need to be internet visible, but they do need to be reachable from all of my devices wherever those happen to be, which means going over the internet somehow. Putting these services on the tailnet that connects all devices makes them available securely everywhere, without the need to expose them publicly on the internet or the risks that come with doing so. It’s a bit like a virtual private intranet.

If you are self-hosting services in another manner, such as using Docker on a Synology home NAS, Tailscale is still worth checking out as these scenarios are natively supported.

The technique I’ve been using to expose services from Kubernetes clusters to my tailnet is one I learned from David Bond in this post from 2020. In David’s approach (simplified here for brevity), each cluster node is individually joined to a tailnet and the cluster itself uses the tailnet for intra-node communication.

Name resolution and ingress come via public Cloudflare DNS entries for inbound traffic, secured by Let’s Encrypt SSL certificates and routed to cluster nodes via the k3s Traefik ingress controller. This approach works, but it requires multiple services to be installed and configured on the cluster, each node must be on the tailnet, and DNS config is manual and external to the home network. Definitely sub-optimal.

If you are a Tailscale user playing with homelab setups, I would love to hear from you on X (Twitter) or Mastodon.

Tailscale Operator

The Tailscale Operator vastly simplifies this picture by enabling a single extension to be installed into the cluster which then exposes the desired services to the tailnet with simple annotations of existing Kubernetes manifests.

The feature went into preview earlier this year, and I got further hyped about it after talking to Maisem Ali at Tailscale Up in May 2023, but it was only over the last few days (I am writing this on Sunday July 30th 2023) that I finally got around to trying the operator out in my setup.

The attraction of the Tailscale operator is that it makes it possible to expose any Kubernetes service running in a selfhosted cluster into a tailnet without the need to install any other cluster components or perform out-of-band config changes.

    graph TD
      subgraph Internet
        vpn[Tailscale tailnet private VPN]
      end
      subgraph House
        pc[PC]
        cluster[Kubernetes Cluster]
        nas[Synology NAS]
        service1[Service 1 Bitwarden]
        service2[Service 2 Gitea]
        cluster --> service1
        cluster --> service2
      end
      phone[Phone] --> vpn
      pc --> vpn
      nas --> vpn
      laptop[Laptop] --> vpn
      service1 --> vpn
      service2 --> vpn

In my case it replaces the complexity of building out clusters with Tailscale on every node, gives me simple DNS setup via MagicDNS, and means I don’t need to install another ingress controller. The remaining gap, which is supposedly on the roadmap, is a solution for L7 ingress with built-in SSL.

After I wrote the above in late July, Maisem submitted a patch to add ingress support which fully addresses the above gap. I cover that later in the post.

Kicking the tyres

I started off by following the instructions outlined in the Kubernetes Operator KB entry, installing the operator manifest and then creating a simple nginx deployment and service to test it out:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: tailscaletest
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-tailscale
      namespace: tailscaletest
      labels:
        app: nginx-tailscale
    spec:
      selector:
        matchLabels:
          app: nginx-tailscale
      replicas: 2 # tells deployment to run 2 pods matching the template
      template:
        metadata:
          labels:
            app: nginx-tailscale
        spec:
          containers:
          - name: nginx-tailscale
            image: nginx:1.20-alpine
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-tailscale
      namespace: tailscaletest
    spec:
      type: LoadBalancer
      loadBalancerClass: tailscale
      ports:
      - port: 80
      selector:
        app: nginx-tailscale

The basic premise is that, having installed the operator into the cluster, a Kubernetes Service of type LoadBalancer whose spec sets loadBalancerClass: tailscale will be detected by the operator and automatically exposed on your tailnet. Only in my case, it didn’t.

A glitch in The Tailnet

Long story short, at the time of writing in July 2023, the operator didn’t work out of the box with my setup.

Testing initially took place on a k3s test cluster running ARM64 Ubuntu 22.04 and I documented my findings in this issue: https://github.com/tailscale/tailscale/issues/8733.

As of October 2023 this issue is resolved in the upstream and latest Tailscale container images. If you are interested in the gory details including how to install the operator into a cluster from source read on, else jump forward to Ingress below.

The short form was that although the Tailscale operator installed fine and was correctly detecting my test service, it wasn’t able to correctly route traffic to the Kubernetes service through my tailnet.

Since I now found myself blocked with the Operator solution, I decided to try some of the other Kubernetes solutions that Tailscale offers. Whilst not as elegant as the operator, both the Proxy and Sidecar approaches can achieve a similar result, albeit with progressively more manual steps. The result of that testing was:

- Tailscale Proxy for Kubernetes didn’t work

- Tailscale Sidecar for Kubernetes did work

At that point in the journey, of all Tailscale Kubernetes options, only the Sidecar approach worked for my particular configuration. Whilst providing helpful information about the state of the solution space, the Sidecar approach wasn’t going to be a viable solution for my needs so I decided to double click on the two broken cases and try and figure out what was going wrong.

Digging in

Doing some spelunking around in various issues and code, I was able to identify the following relevant issues in the Tailscale repo:

- https://github.com/tailscale/tailscale/issues/8111

- https://github.com/tailscale/tailscale/issues/8244

- https://github.com/tailscale/tailscale/issues/5621

- https://github.com/tailscale/tailscale/issues/391

- https://unix.stackexchange.com/questions/588998/check-whether-iptables-or-nftables-are-in-use/589006#589006

and from there derive the following learnings:

- iptables provides firewall and route configuration functionality on Linux. Due to limitations (performance and stability), a more modern alternative called nftables was developed. More details here: https://linuxhandbook.com/iptables-vs-nftables/

- Since only one implementation is installed / active on a host at one time, it is necessary to detect which is running and use the appropriate APIs.

- Older versions of Ubuntu such as 20.04 use the iptables implementation, whereas 22.04 moved to nftables.

- Lack of nftables support in the current Tailscale implementation is a common problem. It impacts Tailscale compatibility when running on more recent OSes, which may default to using nftables rather than iptables.

- This is not a new thing. kube-proxy previously had to accommodate this situation back in 2018, as mentioned in this issue: https://github.com/kubernetes/kubernetes/issues/71305

- On the Tailscale side, an nftables patch recently landed adding support for nftables, albeit experimental and behind a tailscaled flag: https://github.com/tailscale/tailscale/pull/8555

- Full support for nftables in tailscaled, including auto-detection, is still in progress, not on by default and not available for Kubernetes scenarios.

At the original time of writing, auto-detection and switching between iptables and nftables hadn’t been built; it has subsequently landed behind a flag.
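The detection step mentioned above can be sketched in a few lines of shell. This is only an illustration, not what tailscaled does internally: it relies on the fact that iptables 1.8 and later report their backend in parentheses in the output of iptables -V, and the function name iptables_backend is made up for this example.

```shell
# Classify the iptables backend from a version string such as the
# output of `iptables -V`. Prints "nftables", "legacy" or "unknown".
# iptables_backend is a hypothetical helper name, not a Tailscale tool.
iptables_backend() {
  case "$1" in
    *"(nf_tables)"*) echo "nftables" ;;
    *"(legacy)"*)    echo "legacy" ;;
    *)               echo "unknown" ;;  # e.g. older iptables without a suffix
  esac
}
```

On a node this would be called as iptables_backend "$(iptables -V)".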

From the source code

- The code entrypoint for the Tailscale Kubernetes operator lives in operator.go

- The operator’s job is to create a Kubernetes statefulset for every service that sets type: LoadBalancer and loadBalancerClass: tailscale

- The statefulset is instantiated from the docker image tailscale/tailscale, which turns out to be the self-same container image as used by the Tailscale Kubernetes Proxy approach. From my testing, Proxy was a non-working case in my setup.

- The tailscale/tailscale docker image is essentially a wrapper around tailscaled, which is configured and run in all container scenarios

- The code entrypoint for the tailscale/tailscale docker image is containerboot.go
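One way to see this from the cluster side is to list the statefulsets the operator has created together with the container image each one runs. The sketch below assumes the operator and its proxies live in a namespace called tailscale (as in the operator manifest I used; yours may differ), and proxy_images is a hypothetical helper name.

```shell
# List every statefulset in the operator namespace with its container image(s).
# The default namespace "tailscale" is an assumption; pass another as $1.
proxy_images() {
  local namespace="${1:-tailscale}"
  kubectl get statefulsets -n "$namespace" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.containers[*].image}{"\n"}{end}'
}
```

Running proxy_images after applying the test service should show one statefulset per exposed service, each based on the tailscale/tailscale image.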

Insightful. Based on this, the first step was to verify that Ubuntu 22.04 does indeed run on nftables. I duly ssh’d into one of my cluster nodes and ran iptables -V, with the following results confirming it:

Ubuntu 22.04

    james@rapi-c4-n1:~$ lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description: Ubuntu 22.04.2 LTS
    Release: 22.04
    Codename: jammy
    james@rapi-c4-n1:~$ iptables -V
    iptables v1.8.7 (nf_tables)

Repeating this on a 20.04 node shows that, in this case, it’s running on legacy iptables:

Ubuntu 20.04

    james@rapi-c1-n1:~$ lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description: Ubuntu 20.04.5 LTS
    Release: 20.04
    Codename: focal
    james@rapi-c1-n1:~$ iptables -V
    iptables v1.8.4 (legacy)

The overall hypothesis from all of the above research is that the lack of nftables support in tailscaled is biting me because my cluster nodes run Ubuntu 22.04, which defaults to nftables.
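If you want to check which of your own nodes might be affected without ssh’ing into each one, the OS image every node reports to Kubernetes is a reasonable first filter. This is a rough sketch: the helper names are made up, and matching on "Ubuntu 22.04" is only a proxy for "probably defaults to nftables", so the iptables -V check on the node remains the real test.

```shell
# Print "<node name><TAB><OS image>" for every node in the cluster.
list_node_os() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.osImage}{"\n"}{end}'
}

# Print only the names of nodes whose OS image mentions Ubuntu 22.04.
nodes_on_jammy() {
  list_node_os | awk -F '\t' '$2 ~ /Ubuntu 22\.04/ { print $1 }'
}
```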

Testing the hypothetical fix

Since it looked like my issue was the lack of nftables support in out-of-the-box Tailscale and, as luck would have it, experimental support was supposedly there behind a disabled flag, I set about testing this out to see if it could unblock me.

The approach was:

- force on the TS_DEBUG_USE_NETLINK_NFTABLES flag in wgengine/router/router_linux.go

- build a private copy of the tailscale/tailscale container image

- verify this image with Tailscale Proxy, since the implementation is shared with the Operator and the scenario is simpler

- if that works, test the image with the Tailscale Operator

The result of working through these steps was the following private fork: https://github.com/clarkezone/tailscale/commits/nftoperatortestfix. Testing it proved very fruitful: in summary, the nftables support worked as expected. Since others had cited this problem, I decided to be a good open source citizen and submit a PR: https://github.com/tailscale/tailscale/pull/8749.

As the ongoing work on https://github.com/tailscale/tailscale/issues/5621 continues to land (e.g. https://github.com/tailscale/tailscale/pull/8762), the need for my fix will go away as the scenario will just work, but until then it’s a temporary stop-gap for those blocked on adopting the Tailscale Kubernetes Operator.

If you want to follow along you can do the following:

To try it out

- (optional) build the client docker image, substituting appropriate repos and tags:

      PUSH=true REPOS=clarkezone/tsopfixtestclient TAGS=6 TARGET=client ./build_docker.sh

- (optional) build the operator docker image, substituting appropriate repos and tags:

      PUSH=true REPOS=clarkezone/tsopfixtestoperator TAGS=3 TARGET=operator ./build_docker.sh

- Grab the manifest from this branch:

      curl -LO https://github.com/clarkezone/tailscale/raw/nftoperatortestfix/cmd/k8s-operator/manifests/operator.yaml

- Add your clientID and secret per the official instructions

- (optional) if you built and pushed your own containers, update lines 130 and 152 to point to your private images

- Apply the operator manifest:

      kubectl apply -f operator.yaml

- Apply the test manifests to publish an nginx server on the tailnet:

      kubectl apply -f https://gist.github.com/clarkezone/b22a5851f2e4229f5fd29f1115ddee32/raw/277efaa5e099ef055eb445115dd199dc40829df2/tailscaleoperatortest.yaml

- Get the endpoint address for the service on your tailnet with

      kubectl get services -n tailscaletest

  In the external IP column you should see a DNS entry in your tailnet similar to tailscaletest-nginx-tailscale.tail967d8.ts.net; this is the endpoint your service is exposed on.

- You should be able to curl the endpoint and see output from nginx:

      curl tailscaletest-nginx-tailscale.tail967d8.ts.net
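The last two steps can be scripted rather than read off the table by eye. The sketch below is an assumption-laden convenience: it presumes the operator records the tailnet DNS name as a hostname under the service’s status.loadBalancer.ingress (which is where the external IP column gets its value), and tailnet_host is a hypothetical helper name.

```shell
# Print the first tailnet hostname recorded on a Service, or return
# non-zero if none has been assigned yet.
# Usage: tailnet_host <namespace> <service>
tailnet_host() {
  local namespace="$1" service="$2" hosts
  hosts="$(kubectl get service "$service" -n "$namespace" \
    -o jsonpath='{.status.loadBalancer.ingress[*].hostname}')" || return 1
  # jsonpath prints multiple matches space-separated; keep the first.
  set -- $hosts
  [ -n "${1:-}" ] || return 1
  printf '%s\n' "$1"
}
```

With that in place, curl "http://$(tailnet_host tailscaletest nginx-tailscale)" fetches the nginx page once the operator has finished exposing the service.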

Adding Ingress

The ultimate solution I’ve been looking for with a Tailscale Operator type of solution is something that works at the HTTP layer and supports DNS and SSL integration (via Let’s Encrypt) to enable a better, more secure user experience for connecting to clusters.

Over the course of writing this post, my wish came true when Maisem landed the initial PR that adds ingress support to the Tailscale Operator. This provided the final missing link I was looking for, so this post wouldn’t be complete without a quick tour of that. It’s also worth noting that because Ingress support doesn’t depend on the iptables or nftables layer, my original issue is also solved without any of the concerns I’ve articulated above. Time heals all.

To use Ingress support, the earlier example is modified by removing type: LoadBalancer and loadBalancerClass: tailscale from the service and adding an Ingress manifest that sets ingressClassName: tailscale:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: tailscaletest
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-tailscale
      namespace: tailscaletest
      labels:
        app: nginx-tailscale
    spec:
      selector:
        matchLabels:
          app: nginx-tailscale
      replicas: 2 # tells deployment to run 2 pods matching the template
      template:
        metadata:
          labels:
            app: nginx-tailscale
        spec:
          containers:
          - name: nginx-tailscale
            image: nginx:1.20-alpine
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-tailscale
      namespace: tailscaletest
    spec:
      ports:
      - port: 80
      selector:
        app: nginx-tailscale
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: nginx-tailscale
      namespace: tailscaletest
    spec:
      ingressClassName: tailscale
      tls:
      - hosts:
        - "foo"
      rules:
      - http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-tailscale
                port:
                  number: 80

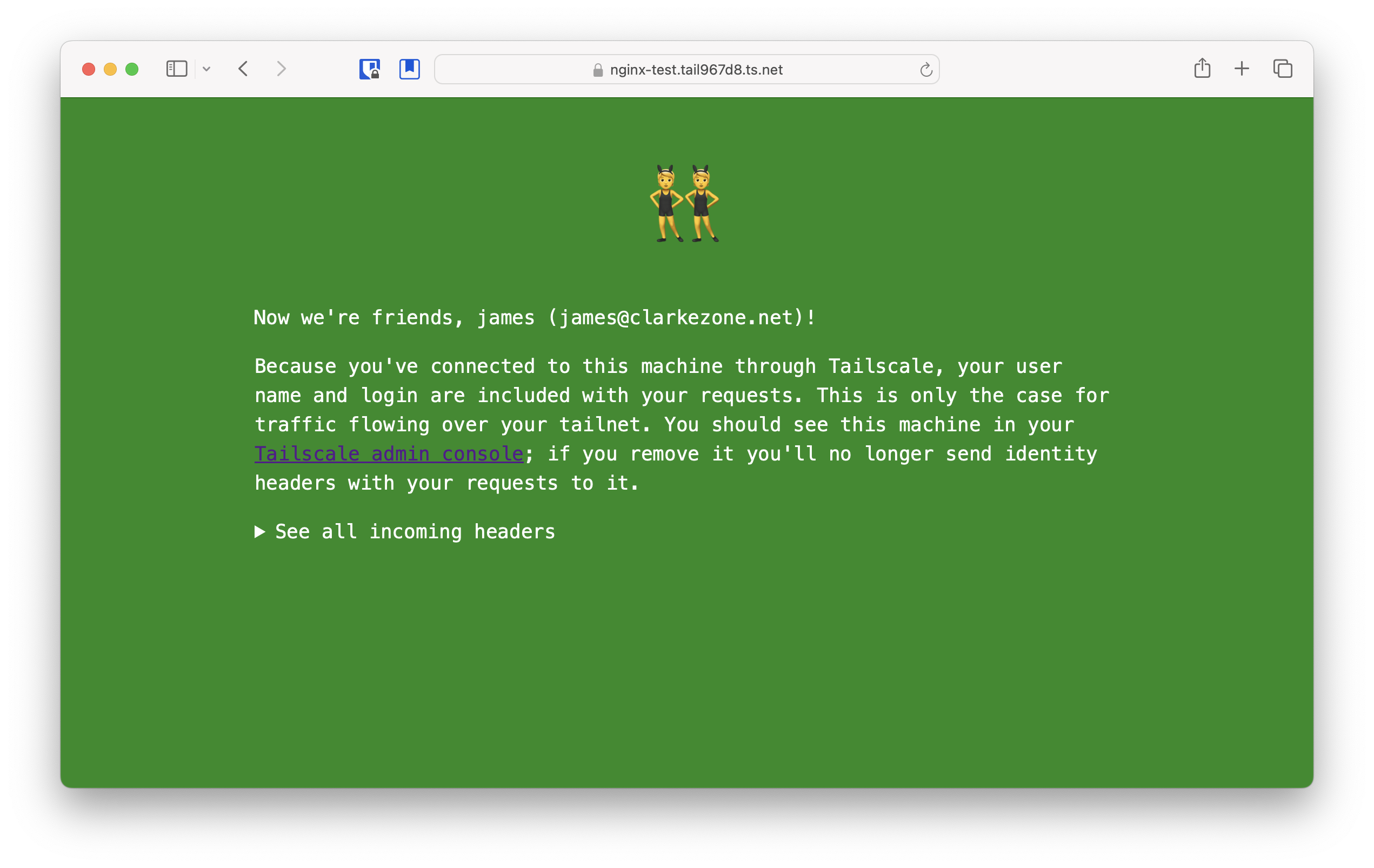

If you apply the above to a cluster with the latest operator installed, via

kubectl apply -f https://gist.github.com/clarkezone/f99ea7f0c08a4f0f7a2487cc73871b89

you will see something similar to this:

    kubectl get ingress -n tailscaletest nginx-tailscale
    NAME              CLASS       HOSTS   ADDRESS                      PORTS     AGE
    nginx-tailscale   tailscale   *       nginx-test.tailxxxx.ts.net   80, 443   7m52s

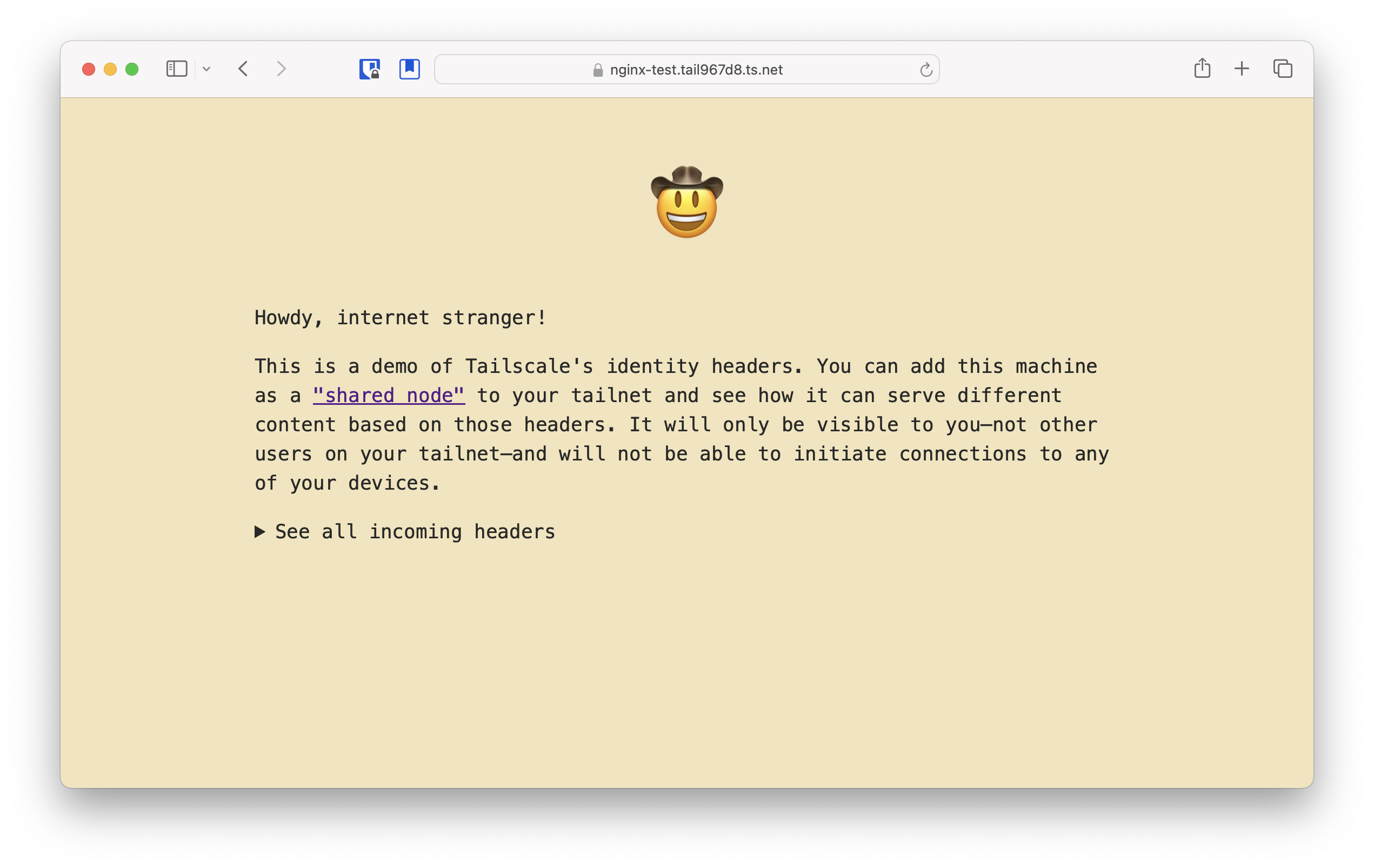

Assuming you have Tailscale’s wonderful MagicDNS enabled, you can now visit https://nginx-test.tailxxxx.ts.net from the browser of any device on your tailnet and get SSL-secured access to your cluster. Mission accomplished! Thx Maisem!

Funnel

Fast forward to October and even more of the feature has now landed, including support for Tailscale’s Funnel functionality, which allows you to route traffic from the wider internet to the cluster courtesy of the operator. The official documentation for the Tailscale operator has also been updated with instructions for all of this goodness.

Funnel support builds on the previous ingress example by adding a simple annotation to the Ingress metadata:

    annotations:
      tailscale.com/funnel: "true"
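If the Ingress from the previous section is already deployed, the same annotation can be added in place with kubectl annotate instead of editing and re-applying the manifest. This is a sketch rather than the documented route: enable_funnel is a made-up helper name, and I am assuming the operator reconciles an annotation added to an existing Ingress.

```shell
# Add the Funnel annotation to an existing Ingress.
# Usage: enable_funnel <namespace> <ingress-name>
enable_funnel() {
  local namespace="$1" ingress="$2"
  # --overwrite makes the call safe to repeat if the annotation already exists.
  kubectl annotate ingress "$ingress" -n "$namespace" \
    tailscale.com/funnel=true --overwrite
}
```

For the example in this post that would be enable_funnel tailscaletest nginx-tailscale.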

In order for this to work, it’s also necessary to ensure that the correct scope is present in the Tailnet policy file as follows:

    "target": ["autogroup:members","tag:k8s"]

With all of the above in place, the same URL now works for users who are not on the tailnet. Your home cluster is serving traffic to the internet.

Next steps

There is an additional feature that enables Tailscale to perform the duties of an authenticating proxy for the k8s control plane which sounds interesting and I plan to try out at some point.

For the scenario of enabling one cluster to access other tailnet resources, there is also an egress proxy solution that I need to look at.

Wrap-up

Thanks for reading this far! I hope you’ve been able to learn something new. I would love to know how you get on with your journey into the fun world of Containers, Kubernetes and Tailscale. Stay in touch on X (Twitter) or Mastodon.